Job Monitoring

Job monitoring system

When submitting jobs to the batch system, users need to specify how many CPUs, GPUs and how much RAM their job requires.

But requesting those resources does not necessarily mean that the job actually uses them. Users have commonly asked the Spartan admins to help them monitor the resource usage of their jobs, so we have developed a system that helps users monitor their running jobs.

This is in addition to the simple aggregate summary that is available if the email options are included in a job submission script, e.g.

# Specify your email address to be notified of progress.

# Includes job usage statistics

#SBATCH --mail-user=myemail@example.edu.au

#SBATCH --mail-type=ALL

How does the system work?

Every 15 seconds, CPU usage, GPU usage and other relevant metrics are collected from each Spartan worker node and forwarded to a central database. The system provides a command line tool that queries these stored metrics, analyzes them and displays the results in an easy-to-understand format.

Using the job monitoring system

The my-job-stats tool can be used to monitor a given jobID.

- Log into Spartan via SSH

- Run my-job-stats -j jobID -a, with the jobID replaced by an actual job number (e.g. 21453210)

You can see the possible options by running

$ my-job-stats -h

Usage: ./my-job-stats [Options] -j <Jobid> [-a|-c|-d|-s|-v]

Options:

-a --overall Display the overall CPU/GPU usage metrics for a running job since it started

-c --current Display the current CPU/GPU usage metrics for a running job

-h --help Help menu

-j --jobid Job id

-n --no-color Do not colorize the usage stats

-s --sleep Only to be used in job submit scripts to ensure that runtime of the job is atleast 30 secs before listing metrics.

Without this option, very short jobs with less than 30 secs runtime may return invalid resource usage stats

-v --verbose Verbose mode

-d --debug Debug mode to dump raw slurm job data

Modes of operation

my-job-stats allows you to show either the instantaneous usage, or the overall usage since the job began.

Instantaneous mode: This mode shows the usage of each CPU/GPU based on the metrics collected in the last 30 seconds and helps identify the most recent resource usage pattern. E.g. my-job-stats -j jobID -c

Overall mode: This mode shows the overall usage of each CPU/GPU allocated to a given job, since it started running. E.g. my-job-stats -j jobID -a

You will get the most information out of the overall mode, as jobs normally change how they use CPUs, GPUs and RAM during the course of their run.
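Because instantaneous mode reflects only the last 30 seconds of metrics, it can be useful to sample it a few times to see how usage changes. The following is a minimal sketch, not part of the tool itself: the function name poll_job_stats and the STATS_CMD override (there only so the function can be exercised without the real tool) are illustrative assumptions.

```shell
# Print the instantaneous usage of a job several times, pausing between samples.
# Arguments: JOBID [INTERVAL_SECS, default 30] [SAMPLES, default 10]
# STATS_CMD is a hypothetical override; by default the real my-job-stats is called.
poll_job_stats() {
    jobid=${1:?usage: poll_job_stats JOBID [INTERVAL_SECS] [SAMPLES]}
    interval=${2:-30}
    samples=${3:-10}
    i=1
    while [ "$i" -le "$samples" ]; do
        echo "=== sample $i of $samples ($(date '+%H:%M:%S')) ==="
        "${STATS_CMD:-my-job-stats}" -j "$jobid" -c
        # Sleep between samples, but not after the last one.
        if [ "$i" -lt "$samples" ]; then
            sleep "$interval"
        fi
        i=$((i + 1))
    done
}
```

For example, poll_job_stats 21453210 30 5 would print five samples, 30 seconds apart. Sampling more often than every 30 seconds is unlikely to show anything new, since each sample covers the last 30 seconds of metrics.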

Understanding the my-job-stats output

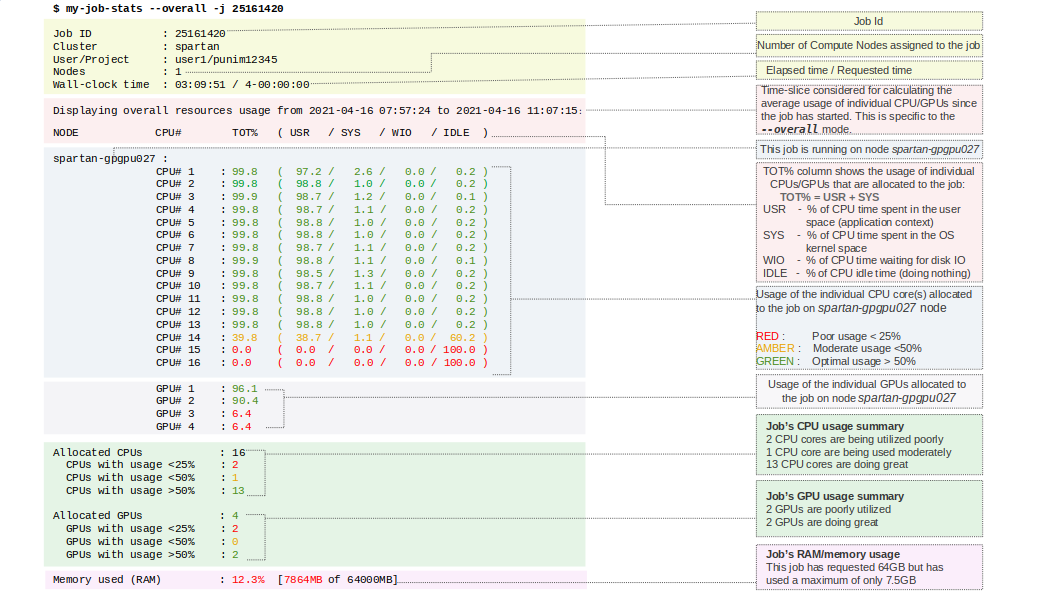

The following example shows a running job's overall resource usage details since it has started, with each field explained. This job has requested 16 CPUs, 4 GPUs, ~64GB RAM and is running on spartan-gpgpu027. The TOT% column shows the usage values of each individual CPU/GPU allocated to the job.

Limitations

The my-job-stats tool can display per-CPU/GPU usage for running jobs only. For completed or failed jobs, it displays only the overall resource utilization, as in the example below. We will extend the tool to display per-CPU/GPU usage for completed jobs in a future upgrade.

$ my-job-stats -j 25168305_38

Job ID: 25168343

Array Job ID: 25168305_38

Cluster: spartan

User/Group: emakalic/punim0316

State: COMPLETED (exit code 0)

Nodes: 1

Cores per node: 8

CPU Utilized: 05:23:48

CPU Efficiency: 12.48% of 1-19:15:28 core-walltime

Job Wall-clock time: 05:24:26

Memory Utilized: 1.42 GB

Memory Efficiency: 4.53% of 31.25 GB

If you want to automatically capture the detailed usage stats before a job completes, run the my-job-stats tool at the end of your job script.

An example job script would look like:

#!/bin/bash

#SBATCH --ntasks=1

#SBATCH --cpus-per-task=16

#Other stuff here..............

#..............

#start your app here

##Log this job's resource usage stats###

my-job-stats -a -n -s

##

NOTE: If you want the job resource usage stats logged to a separate file instead of the job output file, use "my-job-stats -a -j $SLURM_JOB_ID > ${SLURM_JOB_ID}.stats" instead (Slurm sets SLURM_JOB_ID inside the job). That will create a separate file named after the job ID, with a ".stats" extension.

Examples

Example 1: Fixing jobs that request multiple CPUs, but only use 1 CPU

The following Gaussian job requests 1 task with 6 CPUs per task (threads).

$ cat sub.sh

#!/bin/bash

#SBATCH --ntasks=1

#SBATCH --cpus-per-task=6

#SBATCH --time=0-100:00:00

#SBATCH --mem=50G

#SBATCH --partition=sapphire

module purge

module load NVHPC/22.11-CUDA-11.7.0

module load Gaussian/g16c01-CUDA-11.7.0

source /etc/profile

g16root=/data/gpfs/admin/hpcadmin/naren/user_jobs/gaussian/stuff

GAUSS_SCRDIR=/data/gpfs/admin/hpcadmin/naren/user_jobs/gaussian/scratch

export g16root GAUSS_SCRDIR

source $g16root/g16/bsd/g16.profile

[ -d "$GAUSS_SCRDIR" ] || mkdir "$GAUSS_SCRDIR"

g16 $1

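Checking the overall usage of this job shows that nearly all of the work is done by a single CPU:

$ my-job-stats -a -j 25215023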

Job ID : 25215023

Cluster : spartan

User/Project : naren/hpcadmin

Nodes : 1

Wall-clock time : 00:02:21 / 4-04:00:00

Displaying overall resources usage from 2021-04-19 09:04:15 to 2021-04-19 09:06:36:

NODE CPU# TOT% ( USR / SYS / WIO / IDLE )

spartan-bm053 :

CPU# 1 : 0.1 ( 0.0 / 0.0 / 0.0 / 99.9 )

CPU# 2 : 0.5 ( 0.4 / 0.1 / 0.0 / 99.5 )

CPU# 3 : 0.1 ( 0.0 / 0.1 / 0.0 / 99.9 )

CPU# 4 : 14.1 ( 14.1 / 0.1 / 0.0 / 85.9 )

CPU# 5 : 82.8 ( 82.6 / 0.1 / 0.0 / 17.2 )

CPU# 6 : 0.1 ( 0.0 / 0.1 / 0.0 / 99.9 )

Allocated CPUs : 6

CPUs with usage <25% : 5

CPUs with usage <50% : 0

CPUs with usage >50% : 1

Memory used (RAM) : 0.3% [177MB of 51200MB]

Now let's check the latest usage stats (instantaneous mode) to confirm the application's behaviour.

$ my-job-stats -c -j 25215023

Job ID : 25215023

Cluster : spartan

User/Project : naren/hpcadmin

Nodes : 1

Wall-clock time : 00:02:28 / 4-04:00:00

Displaying latest resources usage:

NODE CPU# TOT% ( USR / SYS / WIO / IDLE )

spartan-bm053 :

CPU# 1 : 0.0 ( 0.0 / 0.0 / 0.0 / 100.0 )

CPU# 2 : 0.0 ( 0.0 / 0.0 / 0.0 / 100.0 )

CPU# 3 : 0.3 ( 0.1 / 0.2 / 0.0 / 99.7 )

CPU# 4 : 0.0 ( 0.0 / 0.0 / 0.0 / 100.0 )

CPU# 5 : 100.0 ( 100.0 / 0.0 / 0.0 / 0.0 )

CPU# 6 : 0.0 ( 0.0 / 0.0 / 0.0 / 100.0 )

Allocated CPUs : 6

CPUs with usage <25% : 5

CPUs with usage <50% : 0

CPUs with usage >50% : 1

Memory used (RAM) : 0.3% [177MB of 51200MB]
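The per-CPU lines in output like the above can also be summarised with a small script, for example to flag jobs automatically. This is a minimal sketch that assumes the line layout shown in this example ("CPU# <n> : <TOT%> ( ... )"). The function name count_cpus_below is hypothetical, and the real output may differ in spacing or contain colour codes unless -n is used.

```shell
# Count the CPUs whose TOT% is below a threshold (default 25).
# Reads my-job-stats output on stdin; assumes lines of the form
#   CPU# 4 : 14.1 ( 14.1 / 0.1 / 0.0 / 85.9 )
count_cpus_below() {
    threshold=${1:-25}
    awk -v t="$threshold" '
        # Field 1 is "CPU#", field 3 is ":", field 4 is the TOT% value.
        $1 == "CPU#" && $3 == ":" && ($4 + 0) < t { n++ }
        END { print n + 0 }
    '
}
```

A possible use is my-job-stats -j jobID -a -n | count_cpus_below 25, where -n turns off colouring so that the fields parse cleanly.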

Most software applications need specific options or arguments to be set explicitly before they can use all the allocated CPUs.

In this case, the Gaussian documentation ( https://gaussian.com/equivs/ ) lists the options (GAUSS_PDEF or %NProcShared) needed to use multiple CPUs. Let's modify the job submission script by setting the environment variable GAUSS_PDEF before calling the Gaussian binary.

NOTE: The threading/parallelism options are application specific and hence will be different for each application. The application documentation will have the correct details.

#!/bin/bash

#SBATCH --ntasks=1

#SBATCH --cpus-per-task=6

#SBATCH --time=0-100:00:00

#SBATCH --mem=50G

#SBATCH --partition=sapphire

module purge

module load NVHPC/22.11-CUDA-11.7.0

module load Gaussian/g16c01-CUDA-11.7.0

source /etc/profile

g16root=/data/gpfs/admin/hpcadmin/naren/user_jobs/gaussian/stuff

GAUSS_SCRDIR=/data/gpfs/admin/hpcadmin/naren/user_jobs/gaussian/scratch

export g16root GAUSS_SCRDIR

source $g16root/g16/bsd/g16.profile

[ -d "$GAUSS_SCRDIR" ] || mkdir "$GAUSS_SCRDIR"

export GAUSS_PDEF=${SLURM_CPUS_PER_TASK}

g16 $1

The CPU usage looks much better with the modified job script.

$ sbatch -q hpcadmin sub.sh 01.gjf

Submitted batch job 25215847

$ ~naren/my-job-stats/my-job-stats -a -j 25215847

Job ID : 25215847

Cluster : spartan

User/Project : naren/hpcadmin

Nodes : 1

Wall-clock time : 00:00:41 / 4-04:00:00

Displaying overall resources usage from 2021-04-19 09:56:50 to 2021-04-19 09:57:31:

NODE CPU# TOT% ( USR / SYS / WIO / IDLE )

spartan-bm053 :

CPU# 1 : 76.5 ( 75.7 / 0.8 / 0.0 / 23.5 )

CPU# 2 : 76.7 ( 76.4 / 0.3 / 0.0 / 23.3 )

CPU# 3 : 76.4 ( 76.2 / 0.2 / 0.0 / 23.6 )

CPU# 4 : 76.5 ( 76.3 / 0.1 / 0.0 / 23.5 )

CPU# 5 : 76.4 ( 76.3 / 0.1 / 0.0 / 23.6 )

CPU# 6 : 79.2 ( 78.7 / 0.6 / 0.0 / 20.8 )

Allocated CPUs : 6

CPUs with usage <25% : 0

CPUs with usage <50% : 0

CPUs with usage >50% : 6

Memory used (RAM) : 1.2% [634MB of 51200MB]

-----------------

$ my-job-stats -c -j 25215847

Job ID : 25215847

Cluster : spartan

User/Project : naren/hpcadmin

Nodes : 1

Wall-clock time : 00:01:09 / 4-04:00:00

Displaying latest resources usage:

NODE CPU# TOT% ( USR / SYS / WIO / IDLE )

spartan-bm053 :

CPU# 1 : 100.0 ( 99.9 / 0.1 / 0.0 / 0.0 )

CPU# 2 : 100.0 ( 100.0 / 0.0 / 0.0 / 0.0 )

CPU# 3 : 100.0 ( 100.0 / 0.0 / 0.0 / 0.0 )

CPU# 4 : 100.0 ( 100.0 / 0.0 / 0.0 / 0.0 )

CPU# 5 : 99.9 ( 99.9 / 0.0 / 0.0 / 0.1 )

CPU# 6 : 100.0 ( 100.0 / 0.0 / 0.0 / 0.0 )

Allocated CPUs : 6

CPUs with usage <25% : 0

CPUs with usage <50% : 0

CPUs with usage >50% : 6

Memory used (RAM) : 1.2% [635MB of 51200MB]

--------------------------------------------
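The same pattern of deriving the thread count from the Slurm allocation, rather than hard-coding it, carries over to other threaded software. The sketch below is an illustration under assumptions: the helper name threads_for_job is hypothetical, and OMP_NUM_THREADS applies only to applications that use OpenMP. Check your application's documentation for the setting it actually reads.

```shell
# Print the number of CPUs Slurm allocated per task.
# SLURM_CPUS_PER_TASK is only set when --cpus-per-task is requested,
# so fall back to 1 when it is missing.
threads_for_job() {
    echo "${SLURM_CPUS_PER_TASK:-1}"
}

# Example use inside a job script, for an OpenMP application (assumption):
#   export OMP_NUM_THREADS="$(threads_for_job)"
```

Keeping the value tied to the allocation means the script stays correct if --cpus-per-task is changed later.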

Example 2: Fixing jobs that request multiple CPUs spread across different nodes

Unless an application is MPI (Message Passing Interface) enabled, it may not work on multiple Spartan compute nodes in parallel.

In fact, most Spartan users' applications can only run on, and use the CPUs of, a single node.

When such a non-MPI job requests multiple CPUs, the Slurm job scheduler may assign CPUs across multiple nodes.

In such cases, the application uses only the CPUs on the first node, and the CPUs on the other nodes sit idle.

For example, the following output shows that the job was spawned on 2 nodes, but the CPUs on the second node, spartan-bm054, are not used.

$ my-job-stats -a -j 25216072

Job ID : 25216072

Cluster : spartan

User/Project : naren/hpcadmin

Nodes : 2

Wall-clock time : 00:00:30 / 4-04:00:00

Displaying latest resources usage:

NODE CPU# TOT% ( USR / SYS / WIO / IDLE )

spartan-bm053 :

CPU# 1 : 100.0 ( 100.0 / 0.0 / 0.0 / 0.0 )

CPU# 2 : 100.0 ( 100.0 / 0.0 / 0.0 / 0.0 )

CPU# 3 : 100.0 ( 100.0 / 0.0 / 0.0 / 0.0 )

CPU# 4 : 100.0 ( 99.6 / 0.4 / 0.0 / 0.0 )

spartan-bm054 :

CPU# 5 : 0.0 ( 0.0 / 0.0 / 0.0 / 100.0 )

CPU# 6 : 0.6 ( 0.2 / 0.4 / 0.0 / 99.4 )

CPU# 7 : 0.0 ( 0.0 / 0.0 / 0.0 / 100.0 )

CPU# 8 : 0.0 ( 0.0 / 0.0 / 0.0 / 100.0 )

Allocated CPUs : 8

CPUs with usage <25% : 4

CPUs with usage <50% : 0

CPUs with usage >50% : 4

Memory used (RAM) : 0.3% [273MB of 102400MB]

--------------------------------------------

If an application is not capable of running on multiple nodes, restrict it to run on 1 node in the job submission script by adding #SBATCH --nodes=1 to the job script.
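As an extra safeguard, a job script can check how many nodes it was given before starting a single-node application. This is a minimal sketch: the function name check_node_count is hypothetical, and it relies on the SLURM_JOB_NUM_NODES variable that Slurm sets inside a job.

```shell
# Fail if the job spans more than one node.
# Takes the node count as an optional argument; otherwise uses
# SLURM_JOB_NUM_NODES (set by Slurm inside a job), defaulting to 1.
check_node_count() {
    nodes=${1:-${SLURM_JOB_NUM_NODES:-1}}
    if [ "$nodes" -gt 1 ]; then
        echo "WARNING: job spans $nodes nodes; add #SBATCH --nodes=1 for a single-node application"
        return 1
    fi
    echo "OK: job is confined to a single node"
}
```

Calling check_node_count || exit 1 before launching the application stops the job early instead of leaving CPUs on the other nodes idle for the whole run.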

If you are using workflow management software (e.g. Cromwell) to launch jobs on Spartan, you may have to modify the job launching options in the appropriate configuration files.

For example, the following Cromwell configuration snippet launches a job on only one node using the --nodes 1 option.

submit = """

sbatch -J ${job_name} -D ${cwd} --nodes 1 -o ${out} -e ${err} -t ${runtime_minutes} -p ${queue} \

${"-c " + cpus} \

--mem-per-cpu ${requested_memory_mb_per_core} \

--wrap "/bin/bash ${script}"

"""

For MPI enabled applications (e.g. LAMMPS), you may want to start your application with "srun" inside your job script, e.g. srun <APP NAME>

Real time monitoring

Once your job is running, you can connect to the node it is running on in two ways, both from the login node: you can ssh to the node, or connect to the job with srun.

SSH to job

Using squeue, you can find out which node your job is running on.
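One way to look up the node list for a given job is sketched below. It uses standard squeue options (--noheader, --jobs and --format with the %N node-list field). The function name job_nodes and the SQUEUE override, which exists only so the function can be exercised without a cluster, are illustrative assumptions.

```shell
# Print the node(s) a job is running on.
# SQUEUE is a hypothetical override; by default the real squeue is called.
job_nodes() {
    jobid=${1:?usage: job_nodes JOBID}
    # %N is the list of nodes allocated to the job; --noheader drops the title row.
    "${SQUEUE:-squeue}" --noheader --jobs="$jobid" --format='%N'
}
```

Running job_nodes with your job ID prints only the node list. Running plain squeue for your own jobs shows the same information in its NODELIST column.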

The job is running on spartan-bm083, so you can ssh to it from the login node while your job is running.

[scrosby@spartan-login1 ~]$ ssh spartan-bm083

Last login: Thu Aug 26 17:20:22 2021

[scrosby@spartan-bm083 ~]$

When you ssh to the node, if you have multiple jobs on the same node, your SSH session is randomly put into the container of one of your jobs running on the node.

Use srun to connect to job

Using srun, you can connect directly to the session of a job running on the worker node. Using the job ID, from the login node, you can do

which would give you a bash terminal inside of your job, or you could run a command directly e.g.
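A minimal sketch of both forms follows, assuming the standard srun options --jobid and --pty. The wrapper name attach_to_job and the SRUN override are hypothetical and not part of Spartan's tooling, and the exact options recommended on Spartan may differ.

```shell
# Attach to a running job from the login node.
# With only a job ID, open an interactive bash shell inside the job;
# with extra arguments, run that command inside the job instead.
# SRUN is a hypothetical override; by default the real srun is called.
attach_to_job() {
    jobid=${1:?usage: attach_to_job JOBID [COMMAND...]}
    shift
    if [ "$#" -eq 0 ]; then
        "${SRUN:-srun}" --jobid="$jobid" --pty /bin/bash
    else
        "${SRUN:-srun}" --jobid="$jobid" "$@"
    fi
}
```

For example, attach_to_job 21453210 would open a shell inside job 21453210, while attach_to_job 21453210 top -b -n 1 would run a single command there. On some Slurm versions an additional option such as --overlap may be needed when the job's resources are already in use by a running step.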